- Research

- Open access

- Published:

A q-Polak–Ribière–Polyak conjugate gradient algorithm for unconstrained optimization problems

Journal of Inequalities and Applications volume 2021, Article number: 25 (2021)

Abstract

A Polak–Ribière–Polyak (PRP) algorithm is one of the oldest and popular conjugate gradient algorithms for solving nonlinear unconstrained optimization problems. In this paper, we present a q-variant of the PRP (q-PRP) method for which both the sufficient and conjugacy conditions are satisfied at every iteration. The proposed method is convergent globally with standard Wolfe conditions and strong Wolfe conditions. The numerical results show that the proposed method is promising for a set of given test problems with different starting points. Moreover, the method reduces to the classical PRP method as the parameter q approaches 1.

1 Introduction

The conjugate gradient (CG) methods have played an important role in solving nonlinear optimization problems due to their simplicity of iteration and very low memory requirements [1, 2]. Of course, the CG methods are not among the fastest or most robust optimization algorithms for solving nonlinear problems today, but they are very popular among engineers and mathematicians to solve nonlinear optimization problems [3–5]. The origin of the methods dates back to 1952 when Hestenes and Stiefel introduced a CG method [6] for solving a symmetric positive definite linear system of equations. Further, Fletcher and Reeves modified the same method called FR [7] in the 1960s and developed a conjugate gradient method to solve unconstrained nonlinear optimization problems.

The conjugate gradient methods deflect the steepest descent method [8] by adding to it a positive multiple of the direction used in the previous step. They only require the first-order derivative and overcome the shortcomings of the slow convergence rate of the steepest descent method. By means of conjugacy, the conjugate gradient methods make the steepest descent direction to account for conjugacy and thus enhance the efficiency and reliability of the algorithm. Different conjugate gradient algorithms correspond to different choices of the scalar parameter \(\beta _{k}\) [6, 7, 9]. The parameter \(\beta _{k}\) is selected to minimize a convex quadratic function in a subspace spanned by a set of mutually conjugate descent directions, but the effectiveness of the algorithm depends on the accuracy of the line searches.

Quantum calculus, known as q-calculus, is the study of calculus without limits, where classical mathematical formulas are obtained as q approaches 1. In q-calculus, the classical derivative is replaced by the q-difference operator. Jackson [10, 11] was the first to have some applications of the q-calculus and introduced the q-analogue of the classical derivative and integral operators. Applications of q-calculus play an important role in various fields of mathematics and physics [12–20].

In 1969, Polak and Ribière [21] and Polyak [22] proposed a conjugate gradient method independently, later it was called Polak, Ribière, and Polyak (PRP) method. In view of the practical computation, the PRP method performed much better than the FR method for many unconstrained optimization problems because it automatically recovered once a small step length was generated, although the global convergence of the PRP method was proved only for the strictly convex functions [23]. For general nonlinear functions, Powell showed that the PRP method could cycle infinitely without approaching a solution even if the step-length was chosen to be the least positive minimizer of the line search function [24]. To change this unbalanced state, Gilbert and Nocedal [25] considered Powell’s suggestions [26] to modify the PRP method and showed that this modification of the PRP method is globally convergent for exact and inexact line searches.

In 2019, Yuan et al. proposed a new modified three-term conjugate gradient algorithm based on the modified Armijo line search technique [27]. After that in 2020, they designed a modified conjugate gradient method with a sufficient descent property and a trust region property [28]. The authors in [29] proposed the modified Hestenes–Stiefe (HS) conjugate gradient algorithm in order to solve large-scale complex smooth and nonsmooth optimization problems.

In 2020, Yuan et al. further proposed the PRP method and established the global convergence proof with the modified weak Wolfe–Powell line search technique for nonconvex functions. The numerical results demonstrated the competitiveness of the method compared to the existing methods. The engineering Muskingum model and image restoration problems were used to determine the interesting aspects of the given algorithm [30]. The generalized conjugate gradient algorithms were studied for solving large-scale unconstrained optimization problems within the real world applications, and two open problems were formulated [31–33].

The preliminary experimental optimization results using q-calculus were first shown in the field of global optimization [34]. The idea of this work is utilized in the stochastic q-neurons which are based on activation functions converted into the corresponding stochastic q-activation functions for improving the effectiveness of the algorithm. The q-gradient concept is further utilized in the least mean square algorithm to inherit the fast convergence property with less dependency on the eigenvalue of the input correlation matrix [35]. A modified least mean algorithm using q-calculus was also proposed which automatically adapted the learning rate with respect to the error and was shown to have fast convergence [36]. In optimization, the q-calculus was employed in Newton, modified Newton, BFGS, and limited memory BFGS methods for solving unconstrained nonlinear optimization problems [19, 37–40] with the least number of iterations. In the field of conjugate gradient methods, the q-analogue of the Fletcher–Reeves method was developed [41] to optimize unimodal and multimodal functions, and the Gaussian perturbations were used in some iterations to ensure the convergence globally in the probabilistic sense only.

In this paper, we propose a q-variant of PRP method, called q-PRP, with the sufficient descent property independent of the line searches and convexity assumption of the objective function. Under a condition on the q-gradient of the objective function and some other appropriate conditions, the proposed method is globally convergent. The numerical experiments are conducted to show the effectiveness of the q-PRP algorithm. For a set of given test functions with different starting points, it was able to escape from many local minima to reach global minima due to q-gradient.

The remainder of this paper is organized as follows: In the next section, we present the essential preliminaries. The main results are presented in Sect. 3, and their convergence proofs are given in Sect. 4. The numerical examples of the theoretical results are analyzed in Sect. 5. The paper is then ended with a conclusion and directions for future work.

2 Essential preliminaries

In this section, the principal terms of q-calculus are formed by assuming \(0< q<1\), as follows: The q-integer \([n]_{q}\) is defined by

for all \(n\in \mathbb{N}\). The q-analogue of \((1+x)_{q}^{n}\) is the polynomial given by

The derivative of \(x^{n}\) with respect to x is given by \([n]_{q}x^{n-1}\). The q-derivative \(D_{q}f\) of a function f is given by

if \(x\in 0\), and \(D_{q}f(0)=f'(0)\), provided \(f'(0)\) exists. Note that

if f is differentiable.

Example 2.1

Let the function \(f : \mathbb{R}\to \mathbb{R}\) be such that \(f(x)=\ln x\). Then, we have

It is obvious that the q-derivative of a function is a linear operator, that is, for any constant a and b, we have [42]

Let \(f(x)\) be a continuous function on \([a, b]\), where \(a, b \in \mathbb{R}\). Then, there exist \(\hat{q} \in (0, 1)\) and \(x \in (a,b)\) [43] such that

for all \(q \in (\hat{q}, 1) \cup (1, \hat{q}^{-1})\). The q-partial derivative of a function \(f : \mathbb{R}^{n} \to \mathbb{R}\) at \(x\in \mathbb{R}^{n}\) with respect to \(x_{i}\), where scalar \(q \in (0,1)\), is given as [34]

We now choose the parameter q as a vector, that is,

Then, the q-gradient vector [34] of f is

Let \(\{ q^{k}_{i} \}\) be a real sequence defined by

for each \(i=1,\ldots ,n\), where \(k=0,1,2,\ldots \) , and a fixed starting number \(0< q^{0}_{i} < 1\). Of course, the sequence \(\{q^{k}_{i}\}\) converges to \((1,\ldots , 1)\) as \(k \to \infty \) [38]. Thus, the q-gradient reduces to a classical derivative. For the sake of convenience, we represent the q-gradient vector of f at \(x^{k}\) as

Example 2.2

Consider the function \(f : \mathbb{R}^{2} \to \mathbb{R}\) defined by

Then, the q-gradient is given as

In the next section, we present the q-PRP method. To improve the efficiency, we utilize the q-gradient in inexact line search methods to generate the step-length which ensures the reduction of the objective function value.

3 On q-Polak–Ribière–Polyak conjugate gradient algorithm

Consider the following unconstrained nonlinear optimization problem:

where \(f: \mathbb{R}^{n} \to \mathbb{R}\) is a continuously q-differentiable function. The numerical optimization algorithms of general objective functions differ mainly in generating the search directions. In the conjugate gradient algorithms, a sequence of iterates is generated with a given starting point \(x^{0} \in \mathbb{R}^{n}\) by the following schema:

for all \(k\ge 0\), where \(x^{k}\) is the current iterate, \(d_{q^{k}}^{k}\) is a descent direction of f at \(x^{k}\) and \(\alpha _{k}>0\) is the step-length. Note that the descent direction \(d_{q^{k}}^{k} = -g_{q^{k}}^{k}\) leads to the q-steepest descent method [34]. In the case \(q^{k}\) approaches

as \(k\to \infty \), the method reduces to the classical steepest descent method [7]. The search direction \(d_{q}^{k}\) is guaranteed to have a descent direction due to the following:

The directions \(d_{q^{k}}^{k}\) are generated in the light of classical conjugate direction methods [7, 9, 21, 44, 45] as

where \(\beta _{k}^{q-\mathrm{PRP}}\in \mathbb{R}\) is modified from a scalar quantity \(\beta _{k}\) in the PRP method and presented as follows:

Some well-known conjugate gradient methods are available, such as FR (Fletcher–Revees) [7], PRP (Polak–Ribière–Polyak) [9, 21], and HS (Hestenes–Stiefel) [6] conjugate gradient method, respectively. Among these, the PRP method is considered the best in practical computation. In order to guarantee the global convergence, we choose \(d_{q^{k}}^{k}\) to satisfy the sufficient descent condition:

where \(c>0\) is a constant. There are several approaches to find the step-length. Among them, the exact line search [46, 47] is time consuming and sometimes difficult to carry out. Therefore, the researchers adopt the approaches of some inexact line search techniques such as Wolfe line search [48], Goldstein line search [49], or Armijo line search with backtracking [50]. The most used line search conditions for determining the step-length are the so-called standard Wolfe line search conditions:

and

where \(0<\delta <\sigma <1\). The first condition (7) is called the Armijo condition, which ensures a sufficient reduction of the objective function value, while the second condition (8) is called the curvature condition, which ensures nonacceptance of short step-length. To investigate the global convergence property of the PRP method, a modified Armijo line search method was proposed [51]. For given constants \(\mu >0\), \(\delta , \rho \in (0, 1)\), the line search aims to find

and

where \(0< C_{2}<1<C_{1}\) are constants. Accordingly, since \(\{ f(x^{k})\}_{k\ge 0}\) is a nonincreasing sequence, we have

Equivalently,

It is worth mentioning that a step-length computed by the standard Wolfe line search conditions (7)–(8) may not be sufficiently close to a minimizer of \((P)\). Instead, the strong Wolfe line search conditions can be used, which consist of (7) and, instead of (8), the following strengthened version:

is used. From (11), we see that if \(\sigma \to \infty \), then the step-length satisfying (7) and (11) tends to be the optimal step-length [2]. Note that appropriate choices for a starting point have a positive effect on computational cost and convergence speed of the algorithm. The modified PRP conjugate gradient-like method introduced by [52] is presented in the context of q-calculus as:

With the q-gradient, we can have a modification of [52] by taking

From (12) and (13) for \(k\ge 1\), we obtain

that is,

This implies that \(d_{q^{k}}^{k}\) provides a q-descent direction of the objective function at \(x^{k}\). It is worth mentioning that if exact line search [53] is used to compute the step-length \(\alpha _{k}\), then \(\theta ^{k}=0\), and \(q^{k}\to (1, 1,\ldots , 1)^{T}\) for \(k\to \infty \). Then, finally, the q-PRP method reduces to the classical PRP method.

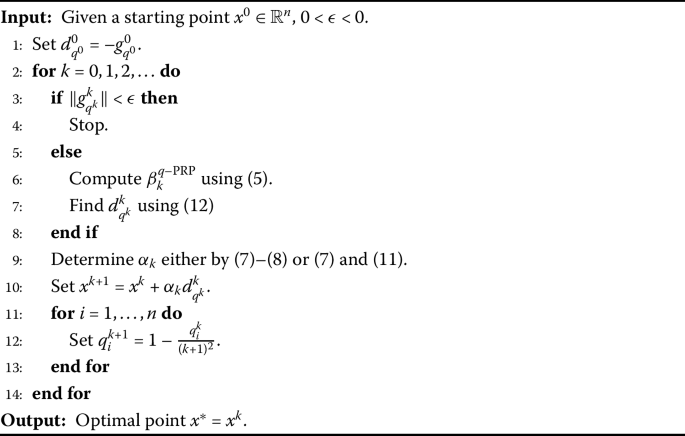

The number of steps taken by the algorithm to a large extent determines the number of iterations which always differs from one problem to another. Thus, we present the following Algorithm 1 to solve the problem \((P)\).

4 Global convergence

In this section, we prove the global convergence of Algorithm 1 under the following assumptions.

Assumption 4.1

The level set

is bounded, where \(x^{0}\) is a starting point.

Assumption 4.2

In some neighborhood N of Ω, f has a continuous q-derivative and there exists a constant \(L>0\) such that

for \(x, y \in N\).

Since \(\{f(x)\}\) is nonincreasing, it is clear that the sequence \(\{ x^{k} \}\) generated by Algorithm 1 is contained in Ω. From Assumptions 4.1 and 4.2, there is a constant \(\eta >0\) such that

for each \(x\in \Omega \). Based on Assumption 4.1, there exists a positive constant \(\mathcal{B}\) such that \(\lVert x\rVert \le B\), for all \(x\in \Omega \). Without any specification, let \(\{x^{k}\}\) and \(\{d_{q^{k}}^{k}\}\) be the iterative sequence and q-descent direction sequence generated by Algorithm 1. To this point, we present the following lemma.

Lemma 4.1

If there exists a constant \(\epsilon >0\), and \(\{q^{k}\}\) generated by (1) is such that

for all k, then there exists a constant \(\mathcal{M} > 0\) such that the q-descent direction satisfies

for all k.

Proof

From (12) and (16) for \(k\ge 1\), we obtain

Taking the norm of both sides of the above equation and using (16), we get

From Assumption 4.2 and (17), we have

From (10), \(\alpha _{k-1}d_{q^{k-1}}^{k-1}\to 0\) and since \(\{q^{k}\}\) approaches \((1,\ldots , 1)^{T}\) as \(k\to \infty \), there exist a constant \(s\in (0, 1)\) and an integer \(k_{0}\) such that the following inequality holds for all \(k\ge k_{0}\):

From (19), we get for any \(k>k_{0}\),

For k sufficiently large with \(s\in (0, 1)\), the second term of the above inequality can satisfy

Thus, we get

Choosing

thus we get (18). □

We now present that the modified q-PRP method with modified Armijo-type line search introduced by [51] due to the q-gradient is globally convergent.

Theorem 4.2

Assume that Assumptions 4.1and 4.2hold, then Algorithm 1 generates an infinite sequence \(\{x^{k}\}\) such that

Proof

For the sake of obtaining a contradiction, we suppose that the given conclusion is not true. Then, there exists a constant \(\epsilon >0\) such that

for all k. If \(\liminf_{ k \to \infty } \alpha _{k} > 0\), then from (10) and (14), we get

This contradicts the assumption (22). Suppose that \(\liminf_{k\to \infty }\alpha _{k}=0\), that is, there is an infinite index set \(\mathcal{K}\) such that

Suppose that step-9 of Algorithm 1 utilizes (9) to generate the step-length. When \(k\in \mathcal{K}\) is sufficiently large, and \(\rho ^{-1}\alpha _{k}\) for \(\rho \in (0 , 1)\) [52] does not satisfy (9), then we must have

From the q-mean value theorem, there is \(\gamma _{k} \in (0,1)\) such that

that is,

From Lemma 4.1 and Assumption 4.2, we have

where \(L>0\). From (22) and (23),

Using (14), we get

Since \(\{d_{q^{k}}^{k}\}\) is bounded and \(\lim_{k\in \mathcal{K}, k \to \infty } \alpha _{k}=0\),

This gives a contradiction. The proof is complete. □

The following important result introduced by Zoutendijk [54] can be expressed in the sense of q-calculus as follows:

Lemma 4.3

Suppose that Assumptions 4.1and 4.2hold. Consider the iteration methods (2) and (4), where \(d_{q^{k}}^{k}\) satisfies (3) and \(\alpha _{k}\) is obtained by standard Wolfe line search conditions (7)–(8) and strong Wolfe line search conditions (7) and (11). Then,

We now present the convergence analysis of Algorithm 1 with standard Wolfe conditions, which is a modification of [55, 56] in the sense of q-calculus. In this case, the step-lengths are bounded below by a positive constant.

Theorem 4.4

Assume that the line search fulfills the standard Wolfe conditions (7)–(8). If there exists a positive constant \(\alpha _{0}\in (0,1]\) such that \(\alpha _{k}\ge \alpha _{0}\) for all \(k\ge 0\), then

Proof

From (3) and the first Wolfe condition (7), we have

This means that the sequence \(\{f(x^{k})\}_{k\ge 0}\) is bounded. From the second standard Wolfe condition (8) and Assumption 4.2, we get

that is,

Post-multiplying both sides by \(\delta (g_{q^{k}}^{k})^{T}d_{q^{k}}^{k}\), we get

From the first standard Wolfe condition (7), we have

Since \(\{f(x^{k})\}_{k\ge 0}\) is bounded,

Thus, Zoutendijk condition (24) holds, that is,

From Assumption 4.1, there exists a constant \(\mathcal{B}\) such that

Since \(\alpha _{k}\ge \alpha _{0}\), we get

This, together with (6) and (26), leads to (25). □

We present the following theorem which is a modification of that in [57] using the q-gradient for q-PRP method with strong Wolfe conditions.

Theorem 4.5

Suppose that \(x^{0}\) is a starting point and Assumptions 4.1and 4.2hold. Let \(\{x^{k}\}\) be the sequence generated by Algorithm 1. If \(\beta _{k}^{q-\mathrm{PRP}}\) is such that the step-length \(\alpha _{k}\) satisfies the strong Wolfe conditions (7) and (11), then either

Proof

From (4), for all \(k\ge 1\), we have

Squaring both sides of the above equation, we get

Since \(d_{q^{k}}^{k}\) satisfies the descent condition \((g_{q^{k}}^{k})^{T} d_{q^{k}}^{k} < 0\),

Pre-multiplying (4) for \(k\ge 1\) by \(g_{q^{k}}^{k}\), we get

From (29) and the second strong Wolfe condition (11), one obtains

From the inequality

for all a, b, \(\sigma \ge 0\), with

and

we can express (30) as

where \(c_{1} = \frac{1}{(1+\sigma ^{2})}\). Note that

From (28) one gets

Using (31), we obtain

If (27) is not true, then from the Zoutendijk condition (24) with (32) we obtain the following inequality:

which holds for k sufficiently large with \(q^{k}\) approaching \((1,\ldots ,1)^{T}\). From (32) and (33), one immediately recovers (30). □

The following lemma immediately follows from the above convergence theorem.

Lemma 4.6

Suppose that Assumptions 4.1and 4.2hold and, from Algorithm 1, the step-length is determined using strong Wolfe conditions. If

for any \(r\in [0, 4]\), then the method converges in the sense that (27) is true.

Proof

If (27) is not true, then from Theorem 4.5, it follows that

Because \(\lVert g_{q^{k}}^{k} \rVert \) is bounded away from zero and \(r\in [0, 4]\), it is easy to see that (35) contradicts (34). Therefore, the lemma is true. □

The above inequality shows that if a conjugate gradient method fails to converge, then the length of the search direction will diverge to infinity. Observe that in the above developments, the sufficient descent condition is assumed. This lemma is very useful for proving the global convergence of some conjugate gradient methods without assuming the sufficient descent condition.

5 Numerical illustration

In this section, we investigate the computational efficiency of Algorithm 1 using standard Wolfe conditions (7) and (8), and strong Wolfe conditions (7) and (11), respectively, in contrast to the classical PRP method under the same two conditions.

All codes of Algorithm 1 and classical PRP method are written in R version 3.6.1 installed on a laptop having Intel(R) Core(TM) i3-4005U, 1.70 GHz CPU processor and 4 GB RAM. The iteration was set to terminate if it exceeded 1000 or the gradient of a function was within 10−6.

Example 5.1

Consider a function (Mishra 6) [58] \(f : \mathbb{R}^{2}\to \mathbb{R}\) given by

We find the q-gradient of the above function at the point

with the starting parameter value

We run the q-gradient algorithm [39] for \(k=1,\ldots ,50\) iterations so that \(q^{50}\) approaches

and in the 50th iteration we get the q-gradient

The complete computational details are given in Table 1 which is depicted graphically through Fig. 1. Note that Fig. 2 provides the three-dimensional view of Mishra 6 test function.

Graphical representation of the q-gradient of Mishra 6 function based on Table 1

Example 5.2

Consider a function \(f : \mathbb{R}^{2} \to \mathbb{R}\) given by

The Rosenbrock function, also called Rosenbrock’s valley or banana function, is a nonconvex, unimodal, and nonseparable function. Finding its global minimum numerically is difficult. It has only one global minimizer located at the point

with the search range \([-100, 100]\) for \(x_{1}\) and \(x_{2}\). For performing the experiment, we first generated 37 different starting points from the interval \([-5, 5]\) for the above Rosenbrock function. The numerical results are shown in Table 2 for Algorithm 1 and Table 3 for the classical PRP Algorithm. From these tables, we observe that the number of iterations \((NI)\) is smaller in the case of Algorithm 1 in comparison to the classical PRP method. The meanings of columns of both tables are well-defined. Figure 3 shows the results of comparisons in the number of iterations.

Example 5.3

Consider the following Rastrigin function \(f : \mathbb{R}^{2} \to \mathbb{R}\), that is,

The Rastrigin test function is a nonconvex, multimodal, and separable function, which has several local minimizers arranged in a regular lattice, but it has only one global minimizer located at the point

The search range for the Rastrigin function is \([-5.12, 5.12]\) in both \(x_{1}\) and \(x_{2}\). This function poses a fairly difficult problem due to its large search space and its large number of local minima. With a chosen starting point \((0.2, 0.2)^{T}\), we minimize this function through Algorithm 1 using strong Wolfe conditions. Note that q-PRP terminates in 5 iterations as

with step-length \(\alpha _{5}=0.252244535\). Thus, we get the global minimizer

with minimum function value

while running the classical PRP method using strong Wolfe conditions from the same chosen starting point, it terminates in 5 iterations with

\(\alpha _{5}=0.002547382\), but fails to achieve the global minimizer as

and

which are not true. This is one of the advantages of using the q-gradient in our proposed method over the classical method.

We now execute Algorithm 1 on a set of test functions taken from the CUTEr library [59] with 51 different starting points under standard and strong Wolfe conditions, respectively. Note that direction \(d_{q}^{k}\) generated by the proposed method is the q-descent direction due to the involvement of the q-gradient. Tables 4 and 5 list the numerical results of Algorithm 1 for 51 different starting points on a set of test problems, and Figs. 4 and 5 show this comparison graphically for both q-PRP and classical PRP methods under standard and strong Wolfe conditions, respectively. We conclude that our method is better than the classical method with the smaller number of iterations for the selected set of test problems.

6 Conclusion and future work

This paper proposed the q-PRP conjugate gradient method, which is an improvement of classical PRP conjugate gradient methods. The global convergence of the proposed method is established under the standard and strong Wolfe line searches. The effectiveness of the proposed method has been shown by some numerical examples. We find that the proposed method due to the q-gradient converges fast for a set of test problems with different starting points. The inclusion of q-calculus in other conjugate gradient methods deserves further investigation.

Availability of data and materials

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Mishra, S.K., Ram, B.: Conjugate gradient methods. In: Introduction to Unconstrained Optimization with R, pp. 211–244. Springer, Singapore (2019)

Andrei, N.: Nonlinear Conjugate Gradient Methods for Unconstrained Optimization. Springer, Berlin (2020)

Li, Y., Chen, W., Zhou, H., Yang, L.: Conjugate gradient method with pseudospectral collocation scheme for optimal rocket landing guidance. Aerosp. Sci. Technol. 104, 105999 (2020)

Liu, J., Du, S., Chen, Y.: A sufficient descent nonlinear conjugate gradient method for solving m-tensor equations. J. Comput. Appl. Math. 371, 112709 (2020)

Yuan, G., Li, T., Hu, W.: A conjugate gradient algorithm for large-scale nonlinear equations and image restoration problems. Appl. Numer. Math. 147, 129–141 (2020)

Hestenes, M.R., Stiefel, E., et al.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409–436 (1952)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964)

Mishra, S.K., Ram, B.: Steepest descent method. In: Introduction to Unconstrained Optimization with R, pp. 131–173. Springer, Singapore (2019)

Polak, E., Ribiere, G.: Note sur la convergence de méthodes de directions conjuguées. ESAIM: Math. Model. Numer. Anal. 3(R1), 35–43 (1969)

Jackson, F.H.: On q-functions and a certain difference operator. Earth Environ. Sci. Trans. R. Soc. Edinb. 46(2), 253–281 (1909)

Jackson, D.O., Fukuda, T., Dunn, O., Majors, E.: On q-definite integrals. Q. J. Pure Appl. Math. 41, 193–203 (1910)

Ernst, T.: A method for q-calculus. J. Nonlinear Math. Phys. 10(4), 487–525 (2003)

Awan, M.U., Talib, S., Kashuri, A., Noor, M.A., Chu, Y.-M.: Estimates of quantum bounds pertaining to new q-integral identity with applications. Adv. Differ. Equ. 2020, 424 (2020)

Samei, M.E.: Existence of solutions for a system of singular sum fractional q-differential equations via quantum calculus. Adv. Differ. Equ. 2020, 23 (2020). https://doi.org/10.1186/s13662-019-2480-y

Liang, S., Samei, M.E.: New approach to solutions of a class of singular fractional q-differential problem via quantum calculus. Adv. Differ. Equ. 2020, 14 (2020). https://doi.org/10.1186/s13662-019-2489-2

Ahmadian, A., Rezapour, S., Salahshour, S., Samei, M.E.: Solutions of sum-type singular fractional q-integro-differential equation with m-point boundary value problem using quantum calculus. Math. Methods Appl. Sci. 43(15), 8980–9004 (2020). https://doi.org/10.1002/mma.6591

Samei, M.E., Hedayati, H., Rezapour, S.: Existence results for a fraction hybrid differential inclusion with Caputo–Hadamard type fractional derivative. Adv. Differ. Equ. 2019, 163 (2019). https://doi.org/10.1186/s13662-019-2090-8

Samei, M.E., Ranjbar, G.K., Hedayati, V.: Existence of solutions for equations and inclusions of multiterm fractional q-integro-differential with nonseparated and initial boundary conditions. J. Inequal. Appl. 2019, 273 (2019). https://doi.org/10.1186/s13660-019-2224-2

Mishra, S.K., Panda, G., Ansary, M.A.T., Ram, B.: On q-Newton’s method for unconstrained multiobjective optimization problems. J. Appl. Math. Comput. 63, 391–410 (2020)

Lai, K.K., Mishra, S.K., Ram, B.: On q-quasi-Newton’s method for unconstrained multiobjective optimization problems. Mathematics 8(4), 616 (2020)

Polyak, B.T.: The conjugate gradient method in extremal problems. USSR Comput. Math. Math. Phys. 9(4), 94–112 (1969)

Polyak, B.T.: The conjugate gradient method in extremal problems. USSR Comput. Math. Math. Phys. 9(4), 94–112 (1969)

Yuan, Y.: Numerical Methods for Nonlinear Programming. Shanghai Sci. Technol., Shanghai (1993)

Powell, M.J.: Nonconvex minimization calculations and the conjugate gradient method. In: Numerical Analysis, pp. 122–141. Springer, Berlin (1984)

Gilbert, J.C., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2(1), 21–42 (1992)

Powell, M.J.: Convergence properties of algorithms for nonlinear optimization. SIAM Rev. 28(4), 487–500 (1986)

Yuan, G., Li, T., Hu, W.: A conjugate gradient algorithm and its application in large-scale optimization problems and image restoration. J. Inequal. Appl. 2019, 247 (2019) https://doi.org/10.1186/s13660-019-2192-6

Yuan, G., Li, T., Hu, W.: A conjugate gradient algorithm for large-scale nonlinear equations and image restoration problems. Appl. Numer. Math. 147, 129–141 (2020). https://doi.org/10.1016/j.apnum.2019.08.022

Hu, W., Wu, J., Yuan, G.: Some modified Hestenes–Stiefel conjugate gradient algorithms with application in image restoration. Appl. Numer. Math. 158, 360–376 (2020). https://doi.org/10.1016/j.apnum.2020.08.009

Yuan, G., Lu, J., Wang, Z.: The PRP conjugate gradient algorithm with a modified WWP line search and its application in the image restoration problems. Appl. Numer. Math. 152, 1–11 (2020). https://doi.org/10.1016/j.apnum.2020.01.019

Yuan, G., Wei, Z., Yang, Y.: The global convergence of the Polak–Ribière–Polyak conjugate gradient algorithm under inexact line search for nonconvex functions. J. Comput. Appl. Math. 362, 262–275 (2019)

Yuan, G., Wang, X., Zhou, S.: The projection technique for two open problems of unconstrained optimization problems. J. Optim. Theory Appl. 186, 590–619 (2020)

Zhang, M., Zhou, Y., Wang, S.: A modified nonlinear conjugate gradient method with the Armijo line search and its application. Math. Probl. Eng. 2020, 6210965 (2020)

Soterroni, A.C., Galski, R.L., Ramos, F.M.: The q-gradient vector for unconstrained continuous optimization problems. In: Operations Research Proceedings 2010, pp. 365–370. Springer, Berlin (2011)

Sadiq, A., Usman, M., Khan, S., Naseem, I., Moinuddin, M., Al-Saggaf, U.M.: q-LMF: quantum calculus-based least mean fourth algorithm. In: Fourth International Congress on Information and Communication Technology, pp. 303–311. Springer, Berlin (2020)

Sadiq, A., Khan, S., Naseem, I., Togneri, R., Bennamoun, M.: Enhanced q-least mean square. Circuits Syst. Signal Process. 38(10), 4817–4839 (2019)

Chakraborty, S.K., Panda, G.: q-line search scheme for optimization problem. arXiv preprint, arXiv:1702.01518 (2017)

Chakraborty, S.K., Panda, G.: Newton like line search method using q-calculus. In: International Conference on Mathematics and Computing, pp. 196–208. Springer, Berlin (2017)

Lai, K.K., Mishra, S.K., Panda, G., Chakraborty, S.K., Samei, M.E., Ram, B.: A limited memory q-BFGS algorithm for unconstrained optimization problems. J. Appl. Math. Comput. (2020). https://doi.org/10.1007/s12190-020-01432-6

Lai, K.K., Mishra, S.K., Panda, G., Ansary, M.A.T., Ram, B.: On q-steepest descent method for unconstrained multiobjective optimization problems. AIMS Math. 5(6), 5521–5540 (2020)

Gouvêa, É.J., Regis, R.G., Soterroni, A.C., Scarabello, M.C., Ramos, F.M.: Global optimization using q-gradients. Eur. J. Oper. Res. 251(3), 727–738 (2016)

Aral, A., Gupta, V., Agarwal, R.P., et al.: Applications of q-Calculus in Operator Theory. Springer, New York (2013)

Rajković, P., Stanković, M., Marinković, S.D.: Mean value theorems in g-calculus. Mat. Vesn. 54(3–4), 171–178 (2002)

Dai, Y.-H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Fletcher, R.: Practical Methods of Optimization, vol. 80, 4. Wiley, New York (1987)

Nocedal, J., Wright, S.: Numerical Optimization. Springer, New York (2006)

Mishra, S.K., Ram, B.: Introduction to Unconstrained Optimization with R. Springer, Singapore (2019)

Wolfe, P.: Convergence conditions for ascent methods, II: some corrections. SIAM Rev. 13(2), 185–188 (1971)

Goldstein, A.A.: On steepest descent. J. Soc. Ind. Appl. Math., A, on Control 3(1), 147–151 (1965)

Armijo, L.: Minimization of functions having Lipschitz continuous first partial derivatives. Pac. J. Math. 16(1), 1–3 (1966)

Grippo, L., Lucidi, S.: A globally convergent version of the Polak–Ribiere conjugate gradient method. Math. Program. 78(3), 375–391 (1997)

Zhang, L., Zhou, W., Li, D.-H.: A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26(4), 629–640 (2006)

Mishra, S.K., Ram, B.: One-dimensional optimization methods. In: Introduction to Unconstrained Optimization with R, pp. 85–130. Springer, Boston (2019)

Zoutendijk, G.: Nonlinear programming, computational methods. In: Integer and Nonlinear Programming, pp. 37–86 (1970)

Yuan, G.: Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optim. Lett. 3(1), 11–21 (2009)

Aminifard, Z., Babaie-Kafaki, S.: A modified descent Polak–Ribiére–Polyak conjugate gradient method with global convergence property for nonconvex functions. Calcolo 56(2), 16 (2019)

Dai, Y., Han, J., Liu, G., Sun, D., Yin, H., Yuan, Y.-X.: Convergence properties of nonlinear conjugate gradient methods. SIAM J. Optim. 10(2), 345–358 (2000)

Mishra, S.K.: Global optimization by differential evolution and particle swarm methods: evaluation on some benchmark functions. Available at SSRN 933827 (2006)

Gould, N.I.M., Orban, D., Toint, P.L.: CUTEr (and SifDec), a constrained and unconstrained testing environment, revisited. ACM Trans. Math. Softw. 29, 373–394 (2003)

Acknowledgements

The first author was supported by the Science and Engineering Research Board (Grant No. DST-SERB-MTR-2018/000121). The third author was supported by Bu-Ali Sina University. The fourth author was supported by the University Grants Commission (IN) (Grant No. UGC-2015-UTT-59235). Constructive comments by the referees are gratefully acknowledged.

Funding

Not applicable.

Author information

Authors and Affiliations

Contributions

The authors declare that the study was realized in collaboration with equal responsibility. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mishra, S.K., Chakraborty, S.K., Samei, M.E. et al. A q-Polak–Ribière–Polyak conjugate gradient algorithm for unconstrained optimization problems. J Inequal Appl 2021, 25 (2021). https://doi.org/10.1186/s13660-021-02554-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-021-02554-6